TL;DR: We present GO-SLAM, a deep-learning-based dense visual SLAM framework that globally optimizes poses and 3D reconstruction in real time.

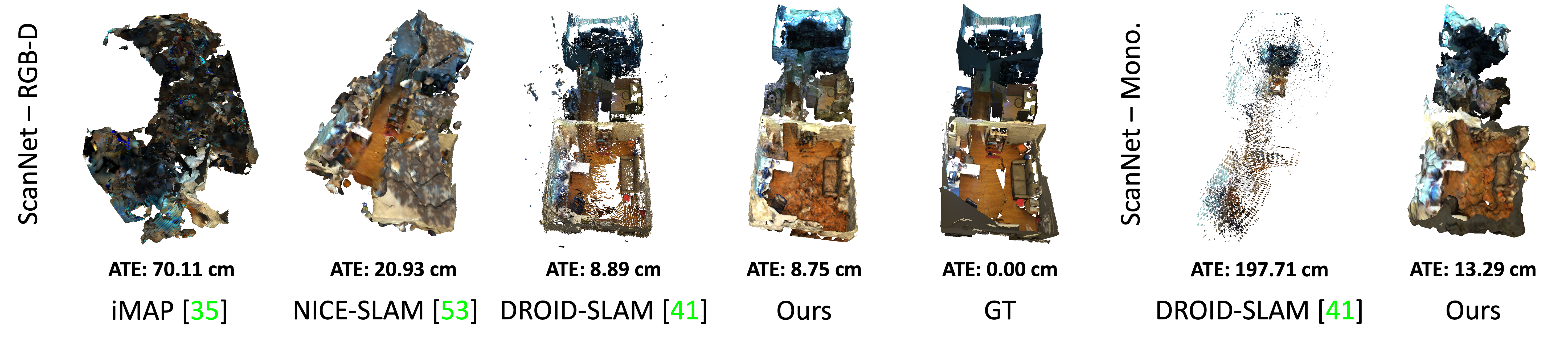

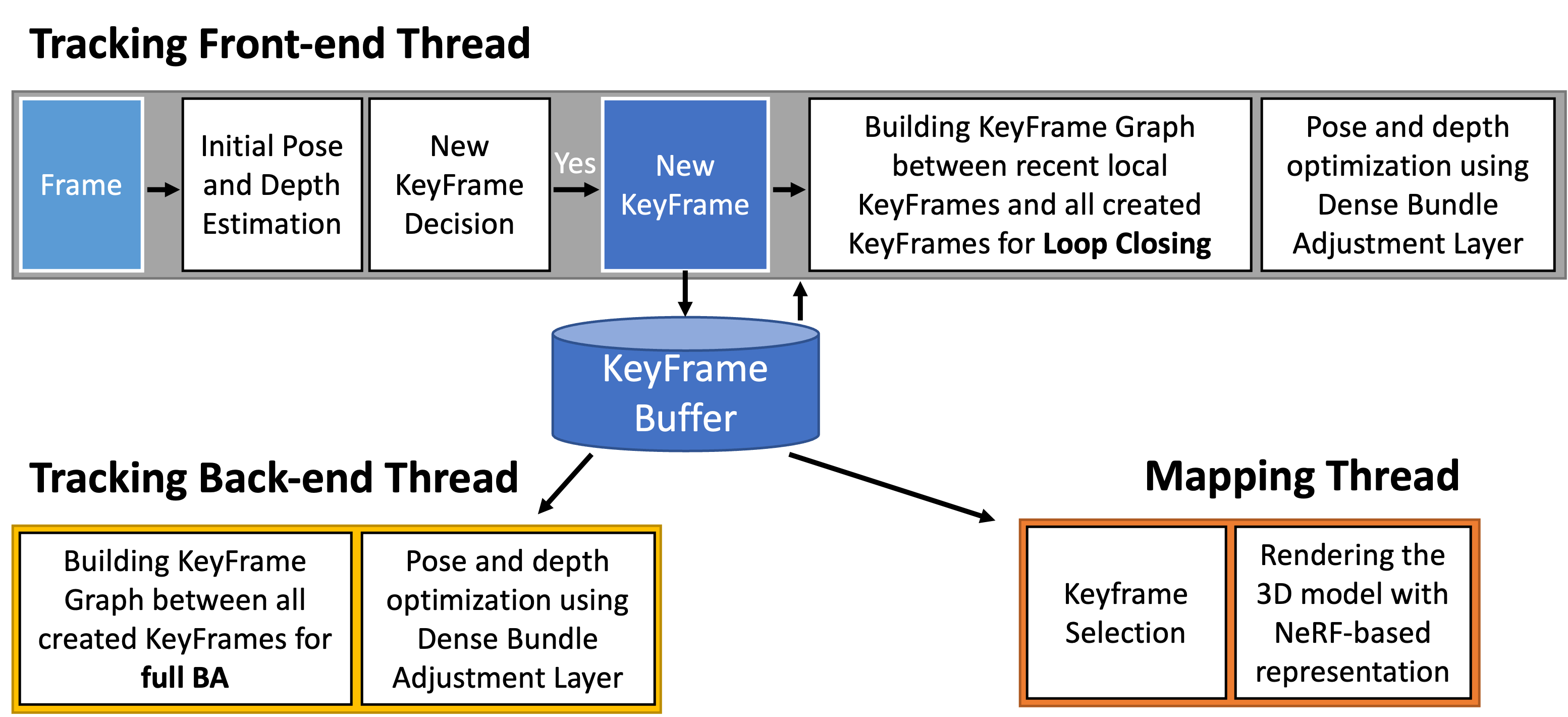

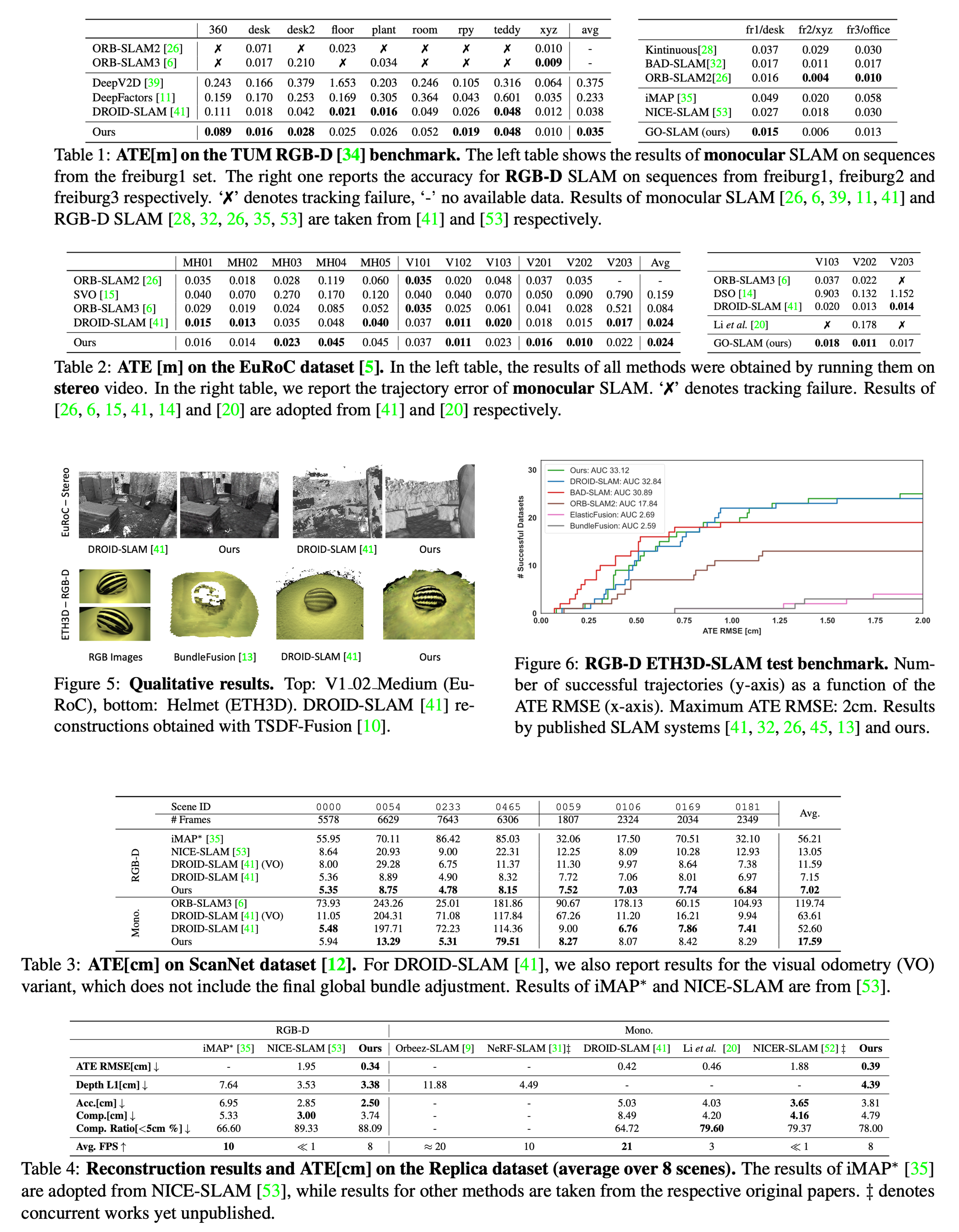

Neural implicit representations have recently demonstrated compelling results on dense Simultaneous Localization And Mapping (SLAM), but they suffer from accumulated errors in camera tracking and distortion in the reconstruction. To address this, we present GO-SLAM, a deep-learning-based dense visual SLAM framework that globally optimizes poses and 3D reconstruction in real time. At its core is robust pose estimation, supported by efficient loop closing and online full bundle adjustment, which optimize per frame by utilizing the learned global geometry of the complete history of input frames. Simultaneously, we update the implicit and continuous surface representation on the fly to ensure the global consistency of the 3D reconstruction. Results on various synthetic and real-world datasets demonstrate that GO-SLAM outperforms state-of-the-art approaches in tracking robustness and reconstruction accuracy. Furthermore, GO-SLAM is versatile and can run with monocular, stereo, and RGB-D input.
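To make the idea of global optimization concrete, here is a minimal toy sketch, not GO-SLAM's implementation: a one-dimensional pose graph in plain Python. Biased odometry makes drift accumulate, and a single loop-closure edge, solved jointly with all other poses in a weighted least-squares problem, pulls the trajectory back into consistency. The scalar poses, the helper names `solve_linear`, `optimize_pose_graph`, and `dead_reckon`, the edge weights, and the trajectory are all hypothetical choices for illustration. GO-SLAM's loop closing and full bundle adjustment operate on full camera poses using learned geometry, which this scalar example does not model.

```python
"""Toy 1D pose-graph optimization: how a loop-closure edge removes drift.

Hypothetical illustration only; this is not GO-SLAM's algorithm, which
optimizes full camera poses with learned dense geometry.
"""


def solve_linear(a, b):
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def optimize_pose_graph(num_poses, edges, anchor_weight=1e6):
    """Weighted least squares over scalar poses (all poses solved jointly).

    Each edge (i, j, z, w) encodes the constraint x[j] - x[i] ~= z with
    weight w. Pose 0 is pinned to 0 by a strong prior to fix the gauge.
    """
    h = [[0.0] * num_poses for _ in range(num_poses)]
    g = [0.0] * num_poses
    h[0][0] += anchor_weight  # prior x[0] ~= 0
    for i, j, z, w in edges:
        # Accumulate w * (e_j - e_i)(e_j - e_i)^T and w * z * (e_j - e_i).
        h[i][i] += w
        h[j][j] += w
        h[i][j] -= w
        h[j][i] -= w
        g[i] -= w * z
        g[j] += w * z
    return solve_linear(h, g)


def dead_reckon(odometry):
    """Chain relative motions from x[0] = 0 (no global optimization)."""
    poses = [0.0]
    for u in odometry:
        poses.append(poses[-1] + u)
    return poses


# Walk 5 steps out and 5 steps back; biased odometry accumulates drift.
odometry = [1.1] * 5 + [-0.9] * 5
edges = [(i, i + 1, u, 1.0) for i, u in enumerate(odometry)]
drifted = dead_reckon(odometry)

# Hypothetical loop closure: pose 10 is recognized as coinciding with pose 0.
edges_with_loop = edges + [(0, 10, 0.0, 100.0)]
corrected = optimize_pose_graph(11, edges_with_loop)

print(f"dead reckoning end: {drifted[-1]:.3f}")
print(f"with loop closure end: {corrected[-1]:.3f}")
```

Because every pose is re-estimated together, the correction is spread across the whole trajectory instead of being applied only to the latest frame. The loop-closure weight of 100 versus 1 for odometry is an arbitrary choice that makes the endpoint snap almost exactly back to the start.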

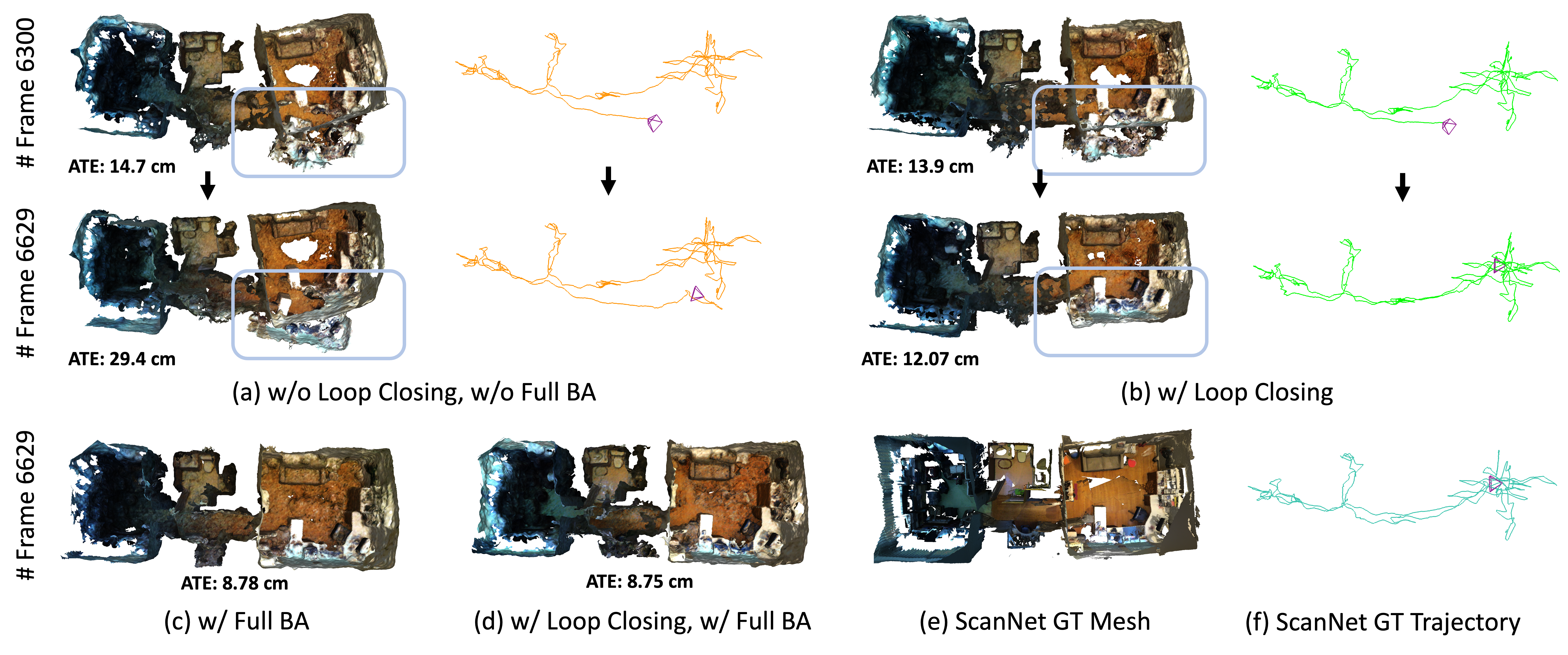

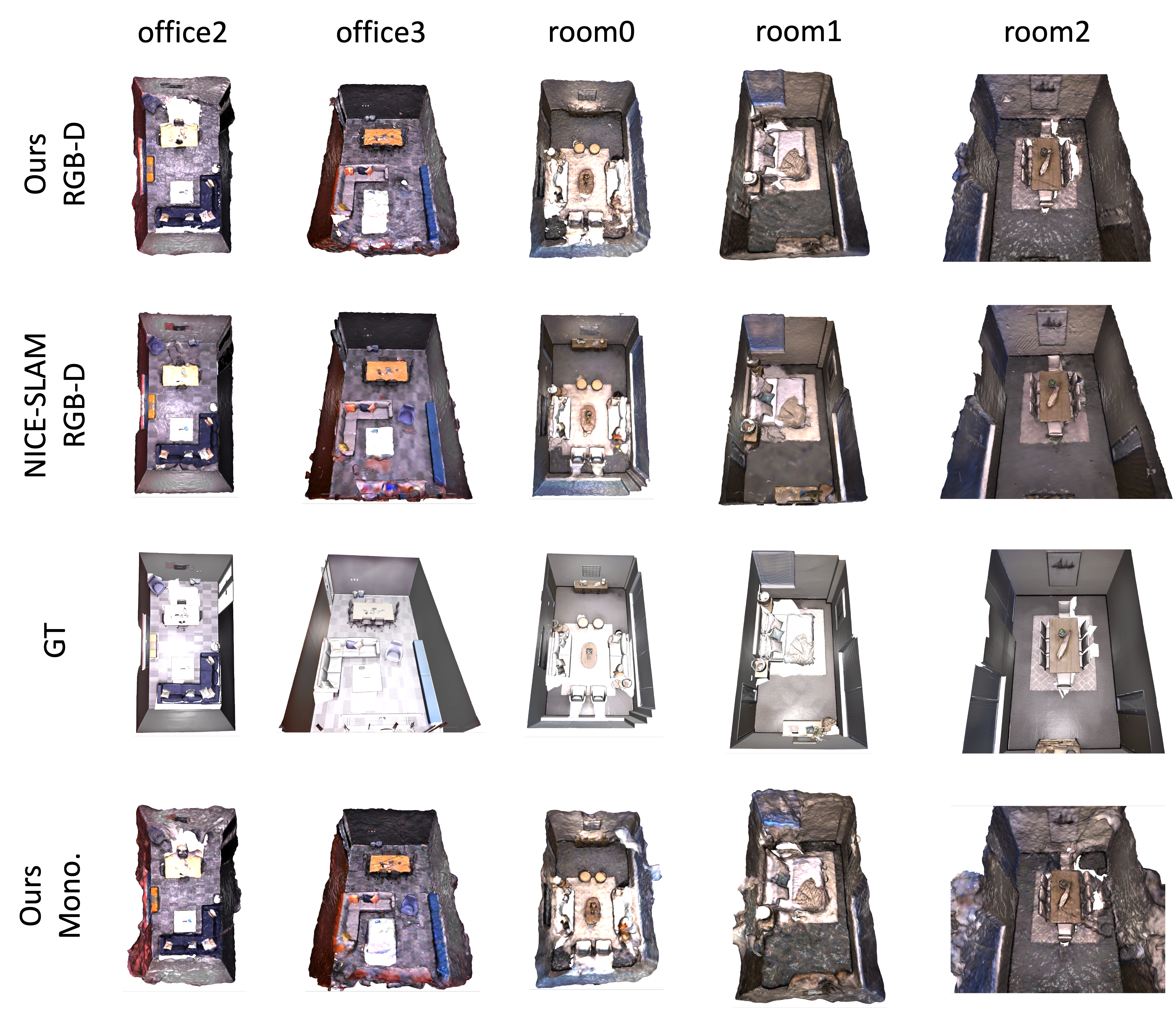

Qualitative examples of loop closing (LC) and full bundle adjustment (BA) on scene0054-00 of ScanNet, a sequence of 6629 frames. (a) Without global optimization, significant error accumulates. (b) With loop closing, the system eliminates the trajectory error using global geometry. (c) Online full BA additionally optimizes the poses of all existing keyframes. (d) The final model, which integrates both loop closing and full BA, yields a more complete and accurate 3D reconstruction.
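The caption refers to trajectory error; one common way to quantify it is the root-mean-square absolute trajectory error (ATE) over matched camera positions. The sketch below assumes the estimated and ground-truth positions are already time-associated and expressed in the same frame. A full ATE evaluation would first align the two trajectories (for example with Horn's or Umeyama's method, including scale for monocular input), which this hypothetical `ate_rmse` helper omits, and it is not necessarily the exact evaluation protocol behind the results on this page.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Root-mean-square translational error between matched 3D positions.

    Hypothetical helper: assumes both trajectories are already
    time-associated and aligned in a common frame (no Horn/Umeyama step).
    """
    if not estimated or len(estimated) != len(ground_truth):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = 0.0
    for p, q in zip(estimated, ground_truth):
        squared += sum((a - b) ** 2 for a, b in zip(p, q))
    return math.sqrt(squared / len(estimated))


# Usage: a short ground-truth path and a slightly drifting estimate.
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
est = [(0.0, 0.0, 0.0), (1.1, 0.0, 0.0), (1.2, 1.1, 0.0)]
print(f"ATE RMSE: {ate_rmse(est, gt):.3f} m")
```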

We test GO-SLAM in RGB-D mode on the ScanNet dataset and compare it to state-of-the-art methods. Our approach achieves significantly more globally consistent reconstructions.

We test GO-SLAM in stereo mode on the EuRoC dataset and compare it to state-of-the-art methods. Compared to the noisy output of DROID-SLAM, which contains several holes and floating points, GO-SLAM produces a more complete and smoother surface and a cleaner reconstruction.

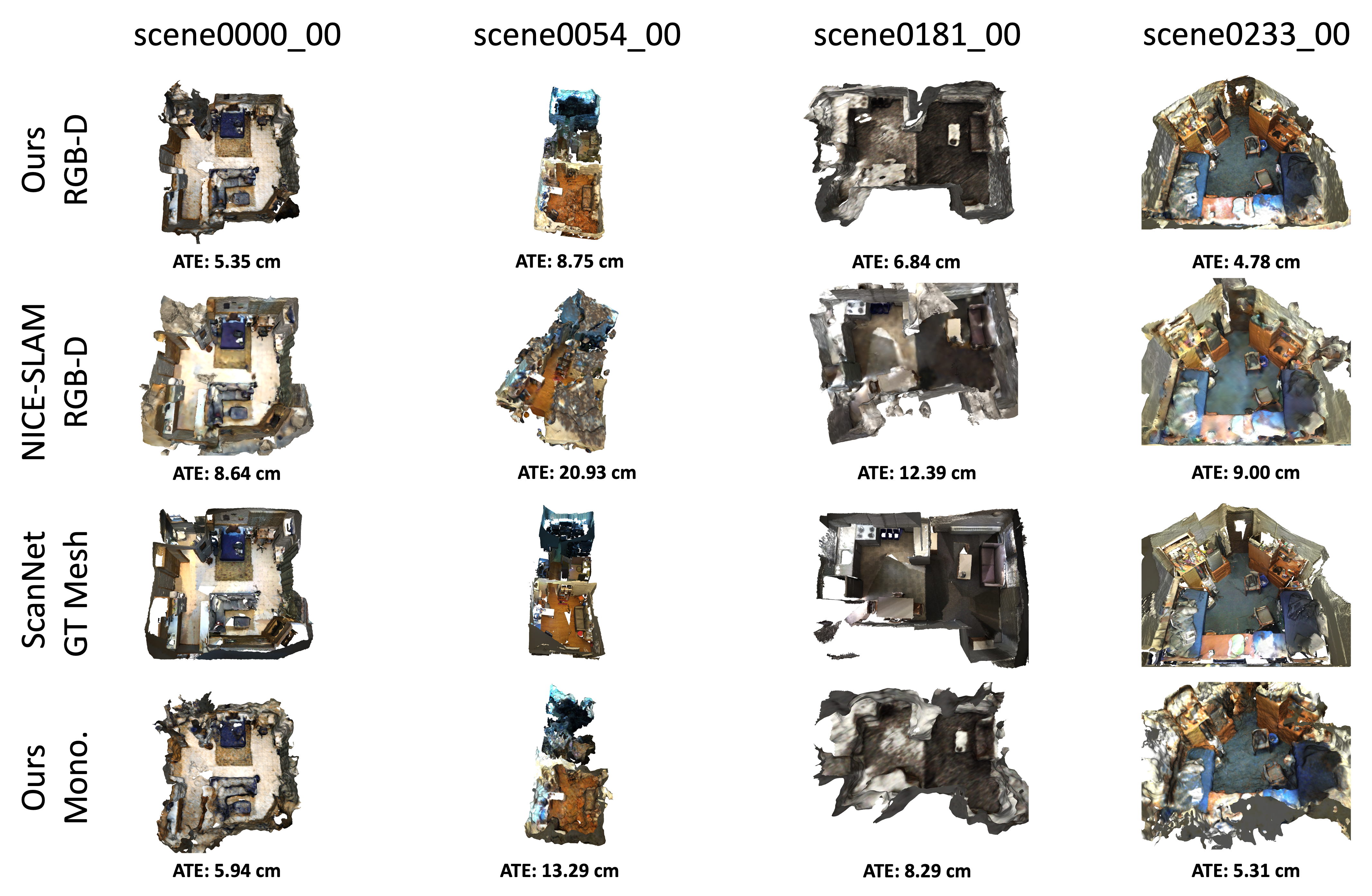

GO-SLAM supports both monocular and RGB-D modes, which we test on the Replica dataset.

@inproceedings{zhang2023goslam,

title = {GO-SLAM: Global Optimization for Consistent 3D Instant Reconstruction},

author = {Zhang, Youmin and Tosi, Fabio and Mattoccia, Stefano and Poggi, Matteo},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023}

}